ADAS & Object Detection Via Camera Systems: The Rapid Development

Around the time the first Tesla Model S was introduced, another name was making its mark in the automotive industry: Mobileye. While many other developers of object-detection sensors relied mainly on large numbers of sensors and enormous amounts of pre-loaded data (3D maps of the environment), Mobileye focused on a single camera system (the "mobile eye") that can handle real-time situations entirely on its own. In this article we discuss the levels of autonomous driving this makes possible and how the technology is likely to develop further.

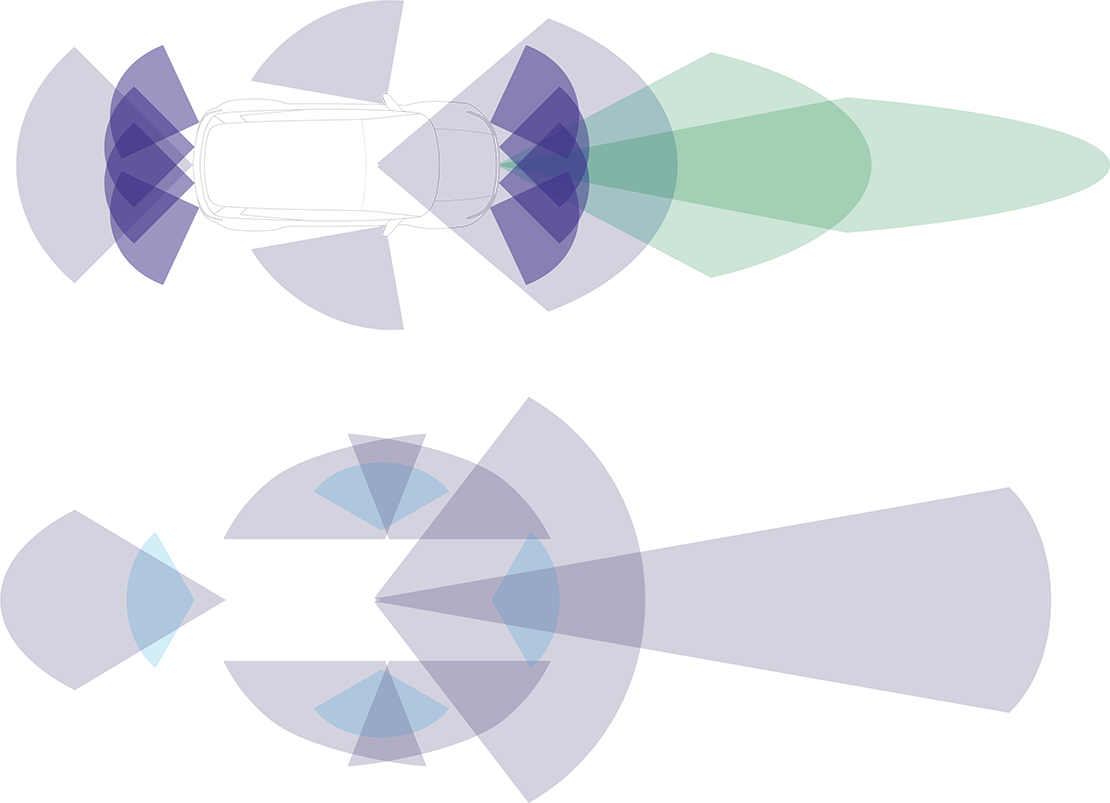

Image: Schematic representation of traditional ADAS operation (top) versus ADAS operation according to Mobileye (bottom)

Mobileye EyeQ: Autonomy level 2+

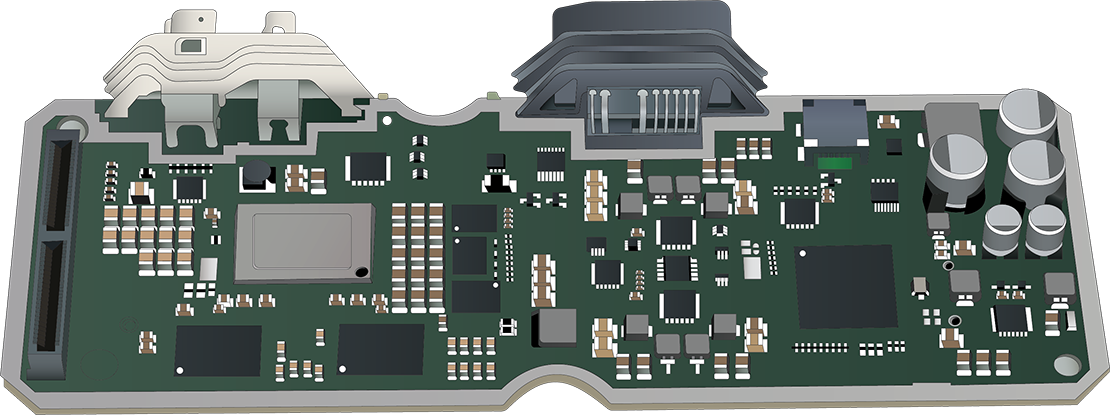

The EyeQ is the basis of Mobileye's camera-only technology: a specially developed SoC (System on Chip). Immediately upon its introduction in 2007, the EyeQ attracted the attention of several major car manufacturers, who were looking for a partner that could supply a complete ADAS system. More than 100 million (!) EyeQs have now been sold worldwide, spread over more than 700 car models from more than 35 different car manufacturers. The latest top model in 2023, the EyeQ6H, has its own internal image signal processor (ISP), a graphics processor (GPU) and a video encoder. This makes it possible to control systems with autonomy level 2+. Even the most premium forms of ADAS are no problem for this SoC. With hardware of this caliber, it probably comes as no surprise that this technology has a lot of functionality. Functions such as lane departure warning, lane keeping assist, automatic emergency braking, front collision warning, adaptive cruise control, traffic sign recognition, high beam assist and park assist are all possible with the EyeQ SoC. In certain cases, the central camera still receives assistance from radar.

Image: A look inside the EyeQ
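To make the forward collision warning and emergency braking functions above a little more concrete, here is a minimal sketch of how a single camera can estimate time to collision (TTC) without measuring distance. It assumes a pinhole camera model and a constant closing speed, and uses the textbook scale-change approximation; the function names and the 2.7 s warning threshold are hypothetical and do not describe Mobileye's actual implementation.

```python
import math

# Hypothetical warning threshold in seconds; real systems tune this
# per vehicle and speed range.
FCW_THRESHOLD_S = 2.7


def ttc_from_scale(width_prev_px: float, width_now_px: float, dt_s: float) -> float:
    """Time to collision from the growth of an object's image width.

    With a pinhole camera, image width is w = f * W / Z, so the scale ratio
    s = w_now / w_prev equals Z_prev / Z_now. At a constant closing speed
    this gives TTC = dt / (s - 1), without knowing the real distance Z.
    """
    scale = width_now_px / width_prev_px
    if scale <= 1.0:
        return math.inf  # object is not growing in the image: not approaching
    return dt_s / (scale - 1.0)


def forward_collision_warning(ttc_s: float, threshold_s: float = FCW_THRESHOLD_S) -> bool:
    """Warn when the predicted time to collision drops below the threshold."""
    return ttc_s < threshold_s


# A car ahead whose image grows from 100 px to 105 px within 0.1 s:
ttc = ttc_from_scale(100.0, 105.0, 0.1)
print(round(ttc, 2), forward_collision_warning(ttc))  # 2.0 True
```

The appeal for a camera-only system is that the real distance cancels out: only the growth of the object's image between two frames is needed. A radar, by contrast, measures distance and closing speed directly.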

Mobileye REM: Autonomy level 3

To reach autonomy level 3 or higher without relying on many sensors or large-scale 3D maps, Mobileye looked for another way to give the EyeQ prior knowledge: information beyond what the camera can see at any given moment.

The solution arrived in 2018 in the form of Road Experience Management (REM). In essence, every car with a recent EyeQ SoC continuously records information about the world its camera sees. This information is collected centrally and combined into a Roadbook: Mobileye's own continuously updated map, which captures not only the road environment but also how the road section is actually used, such as the paths and lane positions vehicles take on it. The Roadbook is then shared Over-The-Air (OTA) with other EyeQ vehicles, which can use it to make better decisions in autonomous mode.

Given the enormous number of EyeQ SoCs in circulation, this approach can quickly produce a complete and practical map. The system can also estimate local driving behavior to a certain extent and adapt its autonomous driving style accordingly: quick merging, for example, matters much more in busy city traffic than in the countryside.
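The crowdsourcing idea behind REM can be illustrated with a minimal sketch: vehicles report what they observed on a road segment, and a central server only publishes a feature once enough different vehicles have confirmed it, using the average reported position. Everything here (the `Observation` fields, `MIN_VEHICLES`, the simple averaging) is a hypothetical simplification; Mobileye's actual Roadbook format and aggregation method are not described in this article.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Observation:
    """One report from one vehicle (hypothetical format)."""
    vehicle_id: str
    segment_id: str
    feature: str   # e.g. "sign:speed_50" or "path:lane_1"
    x_m: float     # position along the road segment
    y_m: float     # lateral offset from the road edge


# Hypothetical: a feature must be seen by this many distinct vehicles.
MIN_VEHICLES = 3


def build_roadbook(observations):
    """Aggregate raw reports into {segment: {feature: (x_m, y_m)}}."""
    grouped = defaultdict(list)
    for obs in observations:
        grouped[(obs.segment_id, obs.feature)].append(obs)

    roadbook = {}
    for (segment, feature), reports in grouped.items():
        # Skip features confirmed by too few vehicles (likely noise).
        if len({r.vehicle_id for r in reports}) < MIN_VEHICLES:
            continue
        roadbook.setdefault(segment, {})[feature] = (
            mean(r.x_m for r in reports),
            mean(r.y_m for r in reports),
        )
    return roadbook


reports = [
    Observation("car1", "seg7", "sign:speed_50", 120.0, 7.5),
    Observation("car2", "seg7", "sign:speed_50", 122.0, 7.7),
    Observation("car3", "seg7", "sign:speed_50", 121.0, 7.6),
    Observation("car1", "seg7", "cone", 50.0, 3.0),  # seen by one car only
]
print(build_roadbook(reports))
```

The same grouping works for driven paths: if vehicles report their lateral position as a `path:...` feature, the average becomes the typical driving line on that segment. The minimum-vehicle rule is one simple way to keep one-off objects, such as a temporary cone, out of the shared map.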

Mobileye SuperVision ADAS

The more of its surroundings an ADAS can see, the better it works and the more functions it can offer. Mobileye therefore introduced SuperVision, developed in collaboration with car manufacturer Geely: a very extensive form of ADAS with no fewer than 7 cameras monitoring the surroundings, 4 parking cameras and 2 SoCs.

This major upgrade lets SuperVision respond much more like a human driver. For example, if a car is parked along the road with its door open, the system keeps a little more lateral distance, and if a pedestrian walks close to the road, the vehicle reduces its speed. The system is also much better at anticipating traffic situations such as merging vehicles and objects that suddenly change direction. All in all, a welcome development.
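The two behaviors described above can be illustrated as simple rules applied to the detected objects. This is only a rule-based sketch with hypothetical object types, names and thresholds; it is not how SuperVision is actually implemented, which the article does not describe.

```python
from dataclasses import dataclass


@dataclass
class DetectedObject:
    kind: str             # e.g. "open_door" or "pedestrian"
    lateral_gap_m: float  # distance between the object and the planned path


# Hypothetical tuning values, for illustration only.
OPEN_DOOR_EXTRA_CLEARANCE_M = 0.5
PEDESTRIAN_NEAR_ROAD_M = 1.5
PEDESTRIAN_SPEED_FACTOR = 0.7


def adjust_plan(speed_limit_mps, objects):
    """Return (extra lateral clearance in m, target speed in m/s)."""
    extra_clearance_m = 0.0
    target_speed_mps = speed_limit_mps
    for obj in objects:
        if obj.kind == "open_door":
            # Give an open car door more room, as a careful driver would.
            extra_clearance_m = max(extra_clearance_m, OPEN_DOOR_EXTRA_CLEARANCE_M)
        elif obj.kind == "pedestrian" and obj.lateral_gap_m < PEDESTRIAN_NEAR_ROAD_M:
            # Slow down for a pedestrian walking close to the road.
            target_speed_mps = min(target_speed_mps, speed_limit_mps * PEDESTRIAN_SPEED_FACTOR)
    return extra_clearance_m, target_speed_mps


print(adjust_plan(14.0, [DetectedObject("open_door", 1.2), DetectedObject("pedestrian", 0.8)]))
```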

Radar and LiDAR as backup

For vehicles to drive fully autonomously (level 5), an enormous amount of safety must be built into the systems. And if, like Mobileye, you rely only on cameras, you quickly run into problems. Not because autonomous driving with cameras alone is impossible, but because there is no backup to check whether the cameras are seeing the right thing and the system is therefore making the right assessment. That is why Mobileye chose to add both radar and LiDAR. Its approach is very different from that of other manufacturers, however, as the table below also shows. Mobileye does not use radar and LiDAR to fill gaps in the camera data; it trusts its camera system enough to treat radar and LiDAR purely as an independent check. Mobileye calls this 'true redundancy'.
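The arithmetic behind 'true redundancy' can be sketched as follows. If the camera subsystem and the radar/LiDAR subsystem can each perceive the environment on their own, a hazard is only missed when both miss it. Assuming the two fail independently (a strong assumption, since conditions such as heavy fog can affect both), the combined miss probability is the product of the individual ones. The failure rates below are hypothetical, and the function names are illustrative.

```python
def combined_miss_probability(p_camera: float, p_radar_lidar: float) -> float:
    """Chance that both subsystems miss the same hazard, assuming independence."""
    return p_camera * p_radar_lidar


def cross_check(camera_sees_hazard: bool, radar_lidar_sees_hazard: bool) -> str:
    """Compare the two independent verdicts (illustrative logic only)."""
    if camera_sees_hazard and radar_lidar_sees_hazard:
        return "confirmed"
    if camera_sees_hazard or radar_lidar_sees_hazard:
        # The subsystems disagree: treat cautiously and log for analysis.
        return "disagreement"
    return "clear"


# Hypothetical rates: each subsystem misses a given hazard with probability 1e-4.
print(combined_miss_probability(1e-4, 1e-4))
print(cross_check(True, False))
```

In this example the combined miss probability drops to about 10^-8; the real gain depends entirely on how independent the two subsystems actually are.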

Nvidia is also involved in autonomous driving

While Mobileye mainly focuses on a universally applicable system that can be implemented relatively easily anywhere, tech giant Nvidia has always approached autonomous driving, and the enormous amount of data it involves, differently. Because Nvidia has its roots in the (graphics) computer industry, it does not see object detection as a separate system but as part of the bigger picture: the entire car, much as a graphics card is one part of a complete PC.

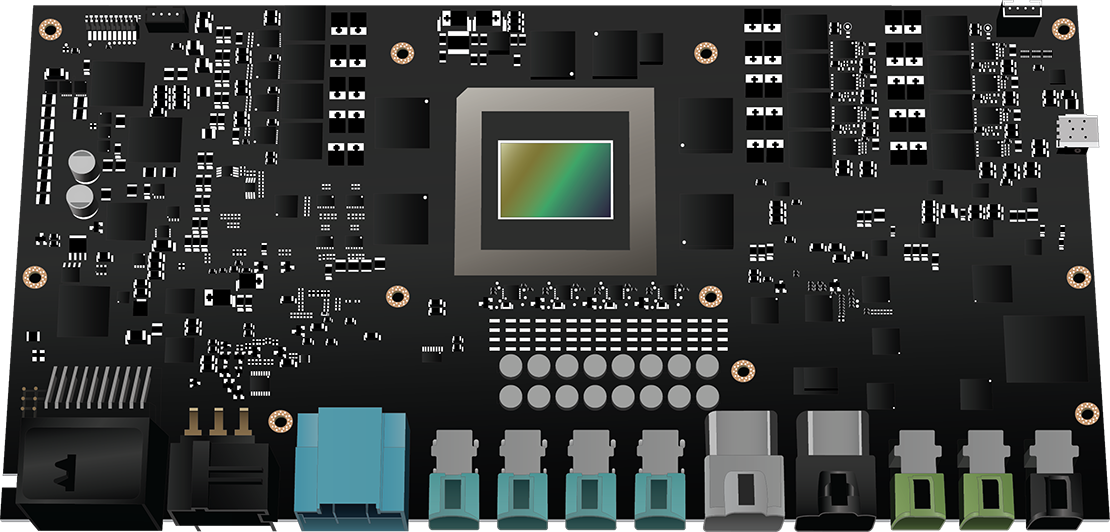

Image: The Nvidia DRIVE Thor in detail

In September 2022, Nvidia announced DRIVE Thor, a comprehensive central computer that also controls systems such as autonomous driving and parking, occupant monitoring, infotainment and the digital instrument cluster. Its enormous computing power (2,000 teraflops) is Thor's strong point and makes several separate control units unnecessary. The platform also supports multi-domain computing, which isolates functions from one another: autonomous driving, for example, can run completely independently, and therefore safely, alongside the other functions on the same computer.

How object detection will influence engine management

By now it is probably clear that all these systems can strongly influence the decisions of the ECU. The message we want to convey is that diagnosis will never be the same with this new technology: the accelerator pedal is no longer the only input the engine management must respond to. Finding the cause of a malfunction therefore becomes more complex. Is the ECU really defective? Or is it simply not receiving the correct input? Or does the cause lie elsewhere in the car?
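The diagnostic point can be illustrated with a simplified torque arbitration: the engine management receives several torque requests and decides which one wins. The sketch below is a hypothetical simplification (real arbitration logic is manufacturer-specific and the names here are illustrative): automatic emergency braking cuts drive torque, adaptive cruise control may request more torque than the pedal, and the function also reports which input won, the kind of information that helps answer the questions above.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class TorqueRequests:
    driver_nm: float                # derived from the accelerator pedal
    acc_nm: Optional[float] = None  # adaptive cruise control, if active
    aeb_active: bool = False        # emergency braking is intervening


def arbitrate(req: TorqueRequests) -> Tuple[float, str]:
    """Return (torque to deliver in Nm, name of the winning input)."""
    if req.aeb_active:
        # Safety intervention overrides everything: no drive torque.
        return 0.0, "aeb"
    if req.acc_nm is not None and req.acc_nm > req.driver_nm:
        # Cruise control wins unless the driver asks for more.
        return req.acc_nm, "acc"
    return req.driver_nm, "driver"


# The driver presses the pedal, but the engine delivers no torque:
print(arbitrate(TorqueRequests(driver_nm=120.0, aeb_active=True)))  # (0.0, 'aeb')
```

Seen from the pedal alone, this looks like an engine that does not respond; seen from the arbitration result, the ECU is doing exactly what it was told.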
